Academic Realities: Lessons Learnt at NeurIPS

I published my thesis at NeurIPS but found something other than inspiration

Welcome to the 4 new subscribers since my last email 👋🏼

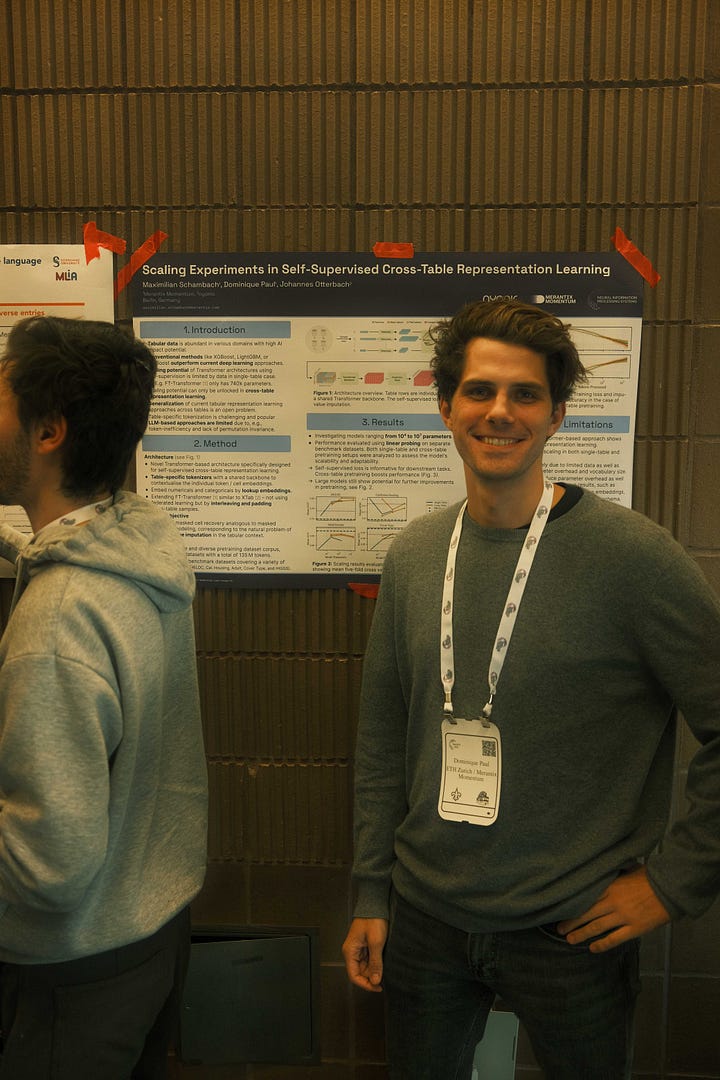

NeurIPS, ICML, and ICLR are the big three machine learning conferences. If you're eyeing a PhD or jockeying for a faculty spot, a full-track paper here - or ideally multiple - is what you want. I'm not aiming for either, but I was very lucky to publish my master's thesis as a workshop paper. Full-track papers are for completed work. Workshop papers are like full-track papers, but with the progress bar at 50-70%, and the workshops are grouped by topic.

I was proud to make it there with my master's thesis. Actually, I was pretty excited. Originally, I was planning to make this whole email about "What it's like at one of the big ML conferences": the cool things I would see and the impressive people I'd meet.

But the conference was different from what I had anticipated.

Instead, the strongest memory I left with was an interaction on the second-to-last day. It stuck because it connects with my start at ETH and reminds me of what matters going forward. More on that in a second.

The backdrop

I started my master's degree with a feeling of inadequacy. I, the guy with the business bachelor's, was sitting between the girl with a theoretical mathematics undergrad and the guy who had won a gold medal at the International Mathematical Olympiad, trying to decipher our first problem set, which looked more like Greek alphabet soup than anything else.

But fast-forward through a blur of problem sets and LaTeX errors, and the imposter syndrome began to dissipate. For one, I noticed that some of the people I admired for their math skills were struggling with the other parts of the bigger optimisation problem called "life". For another, I was actually getting pretty good at math. I also saw that I wasn't the only one struggling with the courses. What determined success wasn't talent but time invested.

The big thing I learnt at ETH was that I could pass any course and learn anything I wanted to. But it's a function of time. What really matters is figuring out for myself what's worth going for in the limited time I have in life. And a big part of that is separating my own feelings from the status tokens of my social environment.

Do I really want to take Foundations of Reinforcement Learning because I'm interested in RL? Or is it the allure of conquering a notoriously tough course at ETH to impress peers and silence my self-doubt?

I am proud that my master's thesis was published as a workshop paper at NeurIPS. It's cool, but just like grades at ETH, publishing something at NeurIPS is a fuzzy signal: if that's what you're optimising for, there are many ways to get there. If your workshop isn't about a hyped topic - as in our case, foundation models for tabular data - the bar is a bit lower.

In the end, the biggest value of my paper has come through the internal reflection that it has triggered.

I never ever would have thought that I'd be here one day. So, for one, this is a reminder that no matter how distant a goal might appear, you can fucking do it. But now that I am here, I question whether this really is something I want to strive for, or whether what really drove me was a status symbol defined by my ETH peer group. I consider myself very lucky to be standing here, though. It makes me feel like I could go much further in research. But it also gives me the final certainty that it's not what I want. Sometimes believing that you can do something is enough to stop you wanting it.

Now, I want to spend more time on skills that are low in status and rich in utility.

My NeurIPS Scoop

Before I move on to the story, here is a condensed version of what I originally thought would fill this email. The idea, by the way, was inspired by Alexander Koenig's very cool CVPR recap talk. I wouldn't have thought that mine would turn out so different.

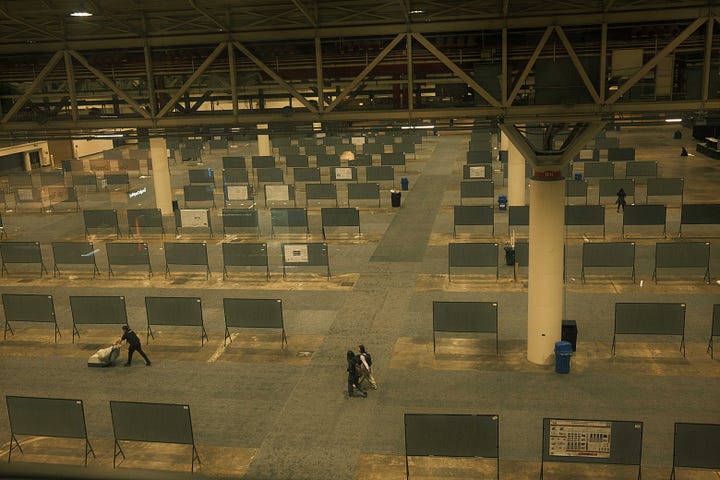

1. The Anti-Conference Conference

NeurIPS was the most down-to-earth place I've been to. Previous conferences I've attended felt like Broadway shows. NeurIPS? More like a minimalist art exhibit. Sparse halls, a single stage, a lone screen. No frills. The picture of the poster hall above visualises this best, and the hall itself is 10 times the size of what you see in it. The focus is clearly on the content.

2. Great place to learn, bad place to socialise

It's a great place to talk about research, but it's hard to strike up conversations beyond that. The usual conversation-starter tricks didn't quite work for me at NeurIPS. "Hi, I'm Dominique, [bla bla my paper], so what do you work on?" - "Oh hi, so I actually work on the generalization error of stochastic mirror descent for quadratically bounded losses."

Try finding a follow-up question for that one.

There are a lot of parties every evening, but you can tell that it's a conference of 15,530 computer scientists who have spent much of their 20s solving equations. There are exceptions, though, and, as at ETH, the cool people you do find are so much cooler than those you'd find anywhere else.

3. Navigate with Purpose

Only go if you know what you're looking for.

NeurIPS wasn't a fit for my non-PhD trajectory. Diving into unfamiliar domains left me adrift in a sea of niche inquiries. There aren't any review-paper-style talks that summarise a field. If you don't already have a background in the topic being discussed, it's hard to catch up.

The real value lies in connecting with the authors, but that requires a targeted approach. With 3218 full-track papers published (acceptance rate 26%) and 58 workshops, the chances of just bumping into the right person are slim. Aimless wandering is not an option.

My lesson, should I go again: do my homework. Pick my topics, read the interesting papers up front and seek out the humans behind the research (h/t to Rike for pinpointing this).

Now, finally, for the story that I mentioned above.

The Memory That Stuck

It was the penultimate day of NeurIPS, and my brain was fizzling out like a cheap sparkler on New Year's Eve. The academic talks were a marathon, and my mental sneakers were worn down to the soles. I was on a - thus far unsuccessful - quest to find more entrepreneurially spirited researchers or at least people who also worked on their own ideas via open source.

That morning, while enduring a presentation in the table representation workshop, I came across this tweet:

Context: Div is the host of "Transformers United", a lecture series on transformer and LLM research. I followed last year's edition, and it felt like TED Talks where every speaker is a machine learning heavyweight and, instead of inspirational quotes, you get layers upon layers of transformers. I highly recommend the series to all of my ML friends.

Div had since founded an autonomous agents start-up and was, in theory, exactly the kind of profile I was on the lookout for. But as I kept scrolling through his Twitter, I landed on a tweet that sparked an irrational sense of insecurity.

Here was Div, who appeared to be a machine learning grandmaster, leagues ahead, while I felt like I was still learning basic chess openings. Cue internal unease.

I shuffled into Div's talk, expectations high. It started great, but then came the demo: the world's first autonomously booked flight. Pretty cool, right? But Div couldn't find the video file for two minutes, and when he did, the entire audience was squinting at a minuscule font. Something cool was happening in front of our eyes, but none of us could see it, and so we jointly marinated in two minutes of silence.

Div powered through, but the energy was fading. Content: Great. Delivery: Not so much. Maybe this underwhelming presentation style was just the conference vibe. Had I simply come with the wrong expectations?

But it wasn't just me struggling to focus. The back of the hall was turning into a bustling marketplace of posters, and the audience's attention drifted there with little resistance. I looked to my right and found my neighbour deep in a game of chess on his phone.

Dissonance was spreading in my head.

Half an hour ago, Div felt like an ML role model. But now I was watching this role model struggling for words to explain his own work.

Post-talk, I approached Div to thank him for sharing the lecture series on YouTube. Two engineers from an AI start-up, Cristin and Dhiraj, sidled up, their confidence outshining their introductions. They had a PhD glow, and their evident disinterest in me seemed to be their stamp of coolness. I introduced myself with (European?) modesty, while Div's gaze flitted about, distracted.

Then, an OpenAI engineer joined our circle. Young, possibly brilliant, definitely nerdy. Attention shifted to him, but his answers to the others' questions were more "um" than "aha". After two minutes, the conversation looped back to the well-trodden drama of Altman's attempted ousting. Was this sensationalism the most interesting thing we had to talk about? I had never met an OpenAI engineer before. I didn't have crazy expectations, but definitely more than this. He was just a regular guy, indistinguishable from my ETH friends.

The Pedestal Illusion

I went to NeurIPS with high expectations, thinking that this is where I'd find the very best of the ML crowd that I had been working to be part of for the last three years. I expected the conference to remind me of how much there is left to learn and how far I have yet to go, while also giving me ideas for what to pursue next.

Instead, I was reminded of what academic realities more often than not look like. While the collective intelligence of researchers is astounding, the area of expertise of the individuals in this crowd is often focussed on a very specific niche. Integrating distant domains - something I enjoy a lot - is a rare exception in academia. And few people are interested in areas outside of their research. That's ok and probably also what enables many to excel at their research.

NeurIPS also reminded me how the perceptions created by online appearances can contrast with real life. I shouldn't be placing online personalities on pedestals. Div is surely a smart guy, but he's probably less of a time traveller than I gave him credit for.

There is much left to be done and built in machine learning. While a proper academic background is necessary to build the software of the future, too much of it is actually counterproductive. Researchers are the ones who will make the breakthroughs, but after NeurIPS I feel they are less likely to lead the change than I previously thought.

I am happy that I went to NeurIPS and had this experience (thank you, Merantix, for the support), and I leave with an even stronger conviction that the combination of strong technical ML knowledge and a vision beyond research is incredibly rare. I already thought so while at ETH, but ascribed it to ETH's emphasis on academic rather than practical excellence.

And now more than ever, I believe that keeping your head down for several months or a year, and focussing not just on research papers but also on working with and understanding applied tools such as LangChain, Replicate or Hugging Face, will take you very far and strongly differentiate your ability to contribute something uniquely meaningful.

Most of all if you are capable of defining for yourself what’s worth contributing to.

Top Reads

1 - Mistral released the paper for its 8x7B model

The model was released in December and seems to be the best open-source model out there; the paper reports that it matches or beats GPT-3.5 and Llama 2 70B on the benchmarks it evaluates. It's very interesting to get a paper that explains how these models work. Mixtral uses a sparse mixture-of-experts architecture, which just means that it has a large number of weights but only uses a subset of them per prediction: in each layer, a router picks two of eight experts for every token. (Arxiv)

+ Gemini, Google's new answer to OpenAI, apparently beats GPT-4 on 30 of 32 tests. (DeepMind)

+ This 5-minute Fireship video, however, explains how the Gemini demo is kind of fake (YouTube)
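To make "only uses a subset per prediction" from the Mistral 8x7B item more concrete, here is a minimal pure-Python sketch of top-2 routing. It assumes toy linear experts and a linear router; `sparse_moe`, `router_weights` and the other names are made up for illustration, and Mixtral's real experts are much larger feed-forward blocks inside each transformer layer.

```python
import math
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def softmax(values):
    """Numerically stable softmax over a short list of scores."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_moe(x, router_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs.

    Toy assumption: each expert is a square matrix, not a real feed-forward block.
    Returns (output, indices of the experts that were actually evaluated).
    """
    logits = matvec(router_weights, x)  # one routing score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # renormalise over chosen experts only
    output = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        expert_out = matvec(experts[i], x)  # only the chosen experts do any compute
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Hypothetical toy setup: 8 random experts, a random router and one token.
random.seed(0)
dim, n_experts = 4, 8
experts = [[[random.gauss(0, 1) for _ in range(dim)] for _ in range(dim)] for _ in range(n_experts)]
router = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
token = [random.gauss(0, 1) for _ in range(dim)]
out, used = sparse_moe(token, router, experts)
print(f"experts used: {used} of {n_experts}")
```

The softmax runs only over the two selected scores, so the mixing weights still sum to one while the other six experts never touch that token.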

2 - Generative AI products are in their second act: AI itself is stepping into the background of products

Current AI apps are popular but not very sticky. The median generative AI app has a DAU/MAU ratio of 14%, while great consumer apps reach 60%. New apps will build workflows, be multimodal and be stickier. (Sequoia)

+ Excessive purchases of GPUs mean that AI compute will become cheaper (Sequoia)
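For readers who haven't met the metric: DAU/MAU is the average number of daily active users divided by the number of users active at least once that month, so 14% means the typical monthly user shows up on roughly 4 of 30 days, versus 18 days at 60%. Here is a minimal sketch, assuming a hypothetical log that maps each user to the dates they were active; the function name and data are illustrative only.

```python
from datetime import date, timedelta


def stickiness(active_days_by_user, year, month):
    """DAU/MAU for one calendar month.

    active_days_by_user (hypothetical format) maps a user id to the set of dates
    that user was active. DAU is averaged over every day of the month; MAU counts
    users active at least once in that month.
    """
    first = date(year, month, 1)
    next_month = date(year + month // 12, month % 12 + 1, 1)
    days = [first + timedelta(d) for d in range((next_month - first).days)]
    daily_counts = [sum(day in active for active in active_days_by_user.values()) for day in days]
    avg_dau = sum(daily_counts) / len(days)
    mau = sum(any(first <= d < next_month for d in active) for active in active_days_by_user.values())
    return avg_dau / mau if mau else 0.0


# Hypothetical activity log for illustration.
june_log = {
    "a": {date(2024, 6, 1) + timedelta(d) for d in range(30)},  # active every day
    "b": {date(2024, 6, 3), date(2024, 6, 10), date(2024, 6, 17)},  # 3 days
    "c": {date(2024, 5, 20)},  # only active in May, so not counted in June's MAU
}
ratio = stickiness(june_log, 2024, 6)
print(f"DAU/MAU: {ratio:.0%}")  # prints DAU/MAU: 55%
```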

3 - 50 AI companies received a grant from the Gates Foundation. Most are medical LLM applications.

The theme is “equitable AI” and the grant recipients get up to $100K. (GF Grand Challenges)

+ This slideshow presents 17 selected projects (GF Grand Challenges)

4 - This service makes it cheap to buy roaming data outside of the EU

We are using this to buy phone data abroad. It's much cheaper than getting a US SIM card or a travel data package from my German telco. (Better Roaming; h/t Mona)

5 - LangChain's State of AI report

Stack Overflow runs an excellent survey on developer trends, but it doesn't cover AI tools explicitly. This report is a miniature version that covers the most popular models, vector databases and use cases. (LangChain blog)

+ A comparison and guide for which vector database you should use (Vectorview)

6 - The Africas - A Sony Camera feature film with beautiful videos from the continent (Sony via Youtube)