Post-SF update: Yes, robotics!

A hackerhouse in SF, a chess playing robot, conversations with 50+ roboticists and a clear idea what's next.

Welcome to 35 new subscribers since my last email 👋🏼

End of May, I went to SF with the hypothesis that robotics might be what I’d want to work on for the next few years. I had been working on robotics for just three weeks when I left. I didn’t know much yet. Was I just romanticising the idea?

Something like that has happened to me before. I was once fascinated by genomics, then briefly by container logistics. Both turned out not to be what I thought. Now, my most limited resource isn’t energy or ideas, but time. And time, for me, is currently measured in savings. €50,000 at the time of my last post, €36,300 now. Having a wrong hypothesis is ok, taking too long to figure out it’s wrong isn’t.

The big unknown in robotics is the state of the technology. This field is not as mature as LLMs are. But how far away is it? Hard to say. One thing I’ve repeatedly learnt is that videos on X and LinkedIn are definitely not a good proxy.

On May 23rd, I put together a 30-day plan with three goals:

Talk to 30 roboticists,

Develop a mental map of research by reading 10 papers, and

Build a robotic application.

In this post, I share what I learnt pursuing all three goals, review the SF hackerhouse I set up, and outline what’s next.

4 takeaways speaking to 50+ roboticists.

One of the things that struck me is how open the robotics community is. I spoke to people from places like Physical Intelligence, 1X, Formic, K Scale Labs, Hugging Face, Tesla, Sereact, Robco, and Agility Robotics. Condensed to its essence, here’s what I learnt.

Yes, robotics has accelerated dramatically in the last 18 months. Why? Mainly three reasons. (1) Vision: Visual understanding is key to a robotic ML model. The leap in VLM capabilities gives us very strong pretrained perception backbones we can piggyback on, as opposed to training a visual understanding in a robotics model from scratch. (2) Data: Robotics has always suffered from data scarcity. There’s no “internet of robotics” you can train on. But it turns out that training across robot types - different arms, shapes, and embodiments - actually improves performance on a specific robot, as shown by RT-X. That wasn’t obvious, and it changes the equation. (3) Capital: A few years ago, hardware and robotics were pariahs to most VCs. Now the AI excitement has spilled over, bringing money, which brings talent, which drives progress, which brings more money.

You don’t need to be a mechanical engineer to build in robotics. I only realised this at a hackathon in Paris. Hardware can always improve, but the real bottleneck is manipulation. And teaching robots to grasp and interact with the world is a pure ML problem. Once I saw that, robotics looked less like hardware and more like AI.

Demos ≠ Reality: Many demos are misleading: What’s shown may work once, but won’t necessarily work on the second attempt. A robot ironing a shirt in a demo doesn’t mean it can fetch the ironing board or lay the shirt in place. Virtually no company has an imitation learning model deployed with customers beyond a POC. The core issue is reliability: a system that works 90 or even 95% of the time has very low business value if it needs human intervention every 15 minutes. I met two previously exited founders, who, excited by videos, had ventured into robotics, only to make a U-turn once they realised the gap. But that gap is also where the opportunity is. If you can make AI-based robotics reliable, everything else follows.

Humanoids are further away than most people want to admit. The next 5 years are all about AI-based robotic arms. From my conversations and the real robotics I’ve seen - not demos online - I don’t see humanoids doing practical work for the next 5 years1. Manipulating objects with a gripper and a fixed base is already very hard; it’s seven degrees of freedom. Add a robotic hand, and suddenly you’re at twenty-three. Add legs, and the complexity grows.

Papers

I read 10 papers in the first month and 30+ by now. I’ll eventually make a post reviewing the literature, but the last two years of robotic manipulation research essentially boil down to three key ideas:

Diffusion policies (what we use for image gen) are key to modelling robotics’ inherently multi-modal behaviour.

Pretrained VLM models can be used for robotics, giving us strong perception out of the box, so we don’t need to train them from scratch.

Cross-robot training outperforms single-robot training: robots generalise better when they learn across form factors.

If you want to dive into the research, these are the top 5 papers I recommend starting with to get a grasp of the big ideas:

ALOHA/ACT: The landmark paper for learning a single task from scratch with two arms using just 50 demonstrations. I use ACT as the baseline model for all tasks I train.

Diffusion Policy: The first paper to apply diffusion models to robotics. This has now become standard.

ALOHA Unleashed: Combined the ideas of ALOHA and diffusion into one model and scaled up data from 50 samples per task to 8,000+. What surprised me the most and made this paper so interesting: scaling up data alone is not enough to achieve more than a 95% success rate.

RT-X & Open X-Embodiment: Google aggregated and open-sourced the largest robotics dataset to date. For the first time, someone showed that cross-training on different robot embodiments and tasks works better than a model just trained on a single robot embodiment.

π0 & π0.5: The currently strongest models, combining many of the ideas above and more.

My chess robot journey

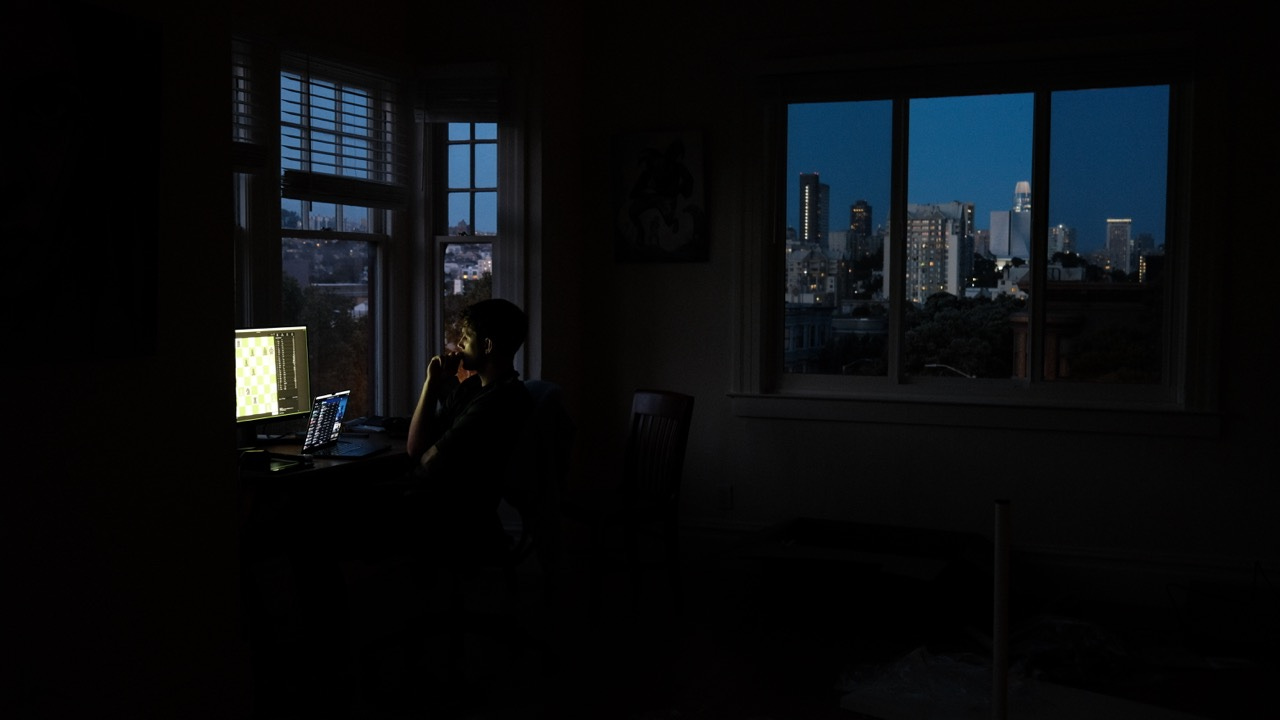

Reading and talking gave me a sense of where the field is at. But the real learning came from building. My goal: a robotic arm I could play chess against.

It took much longer than I expected, but I kept learning, and since nothing contradicted my initial hypothesis - that robotics is worth working on for the coming years - I kept going. In the end, I left SF without a working robot (foreshadowing), and Chesso, my little robot, ate up ~70% of my time in the city. Here’s what I built, where I went wrong, and what I learnt.

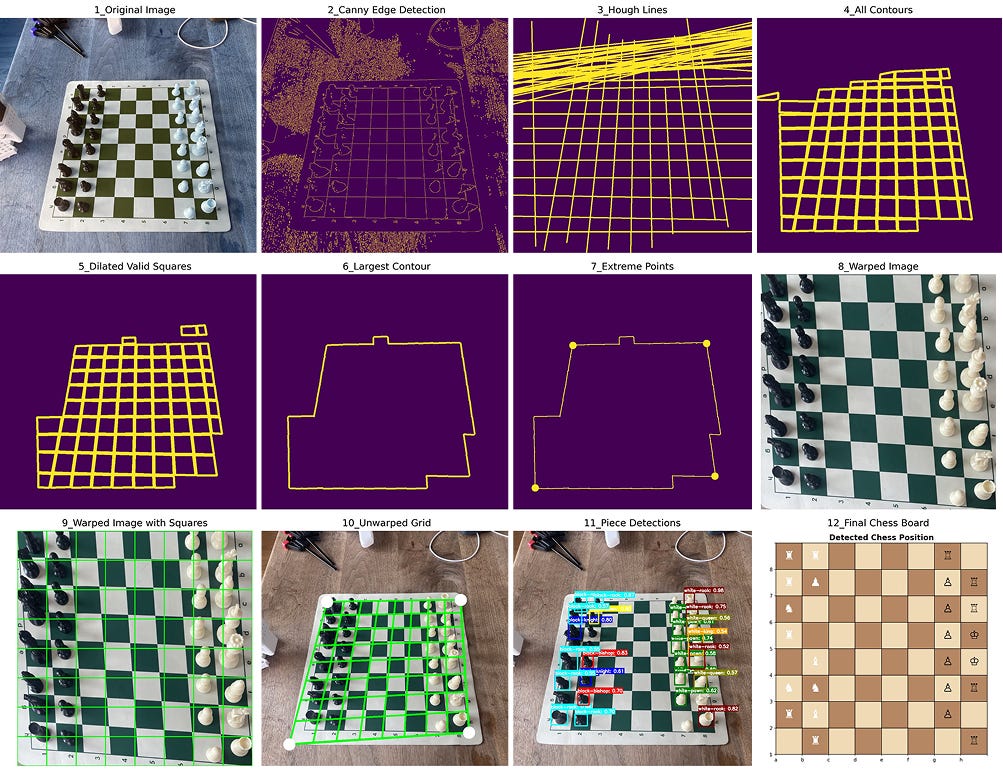

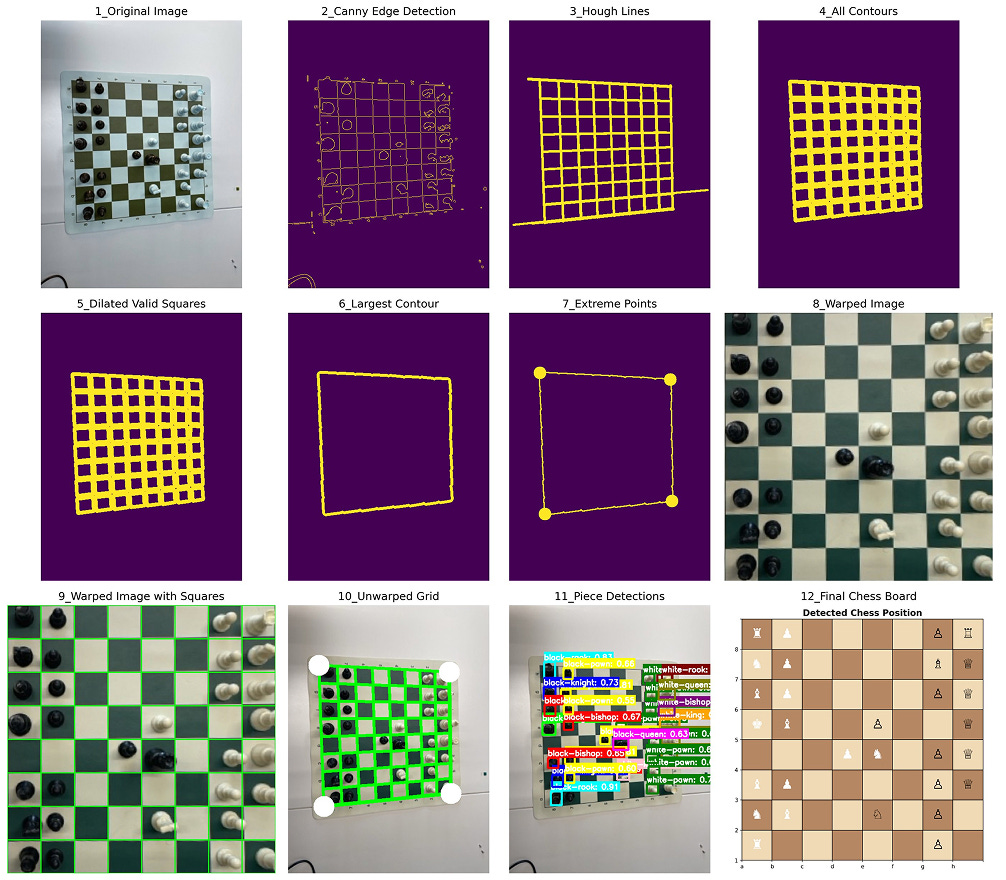

Part 1: Reading out the chessboard position from an image

Before the robot could move a piece, the app needed to understand the current board position. That broke into two subproblems:

Find the board in the image.

Identify which piece is where.

Mapping pieces to squares - this part is easy if the solutions to (1) and (2) work well.

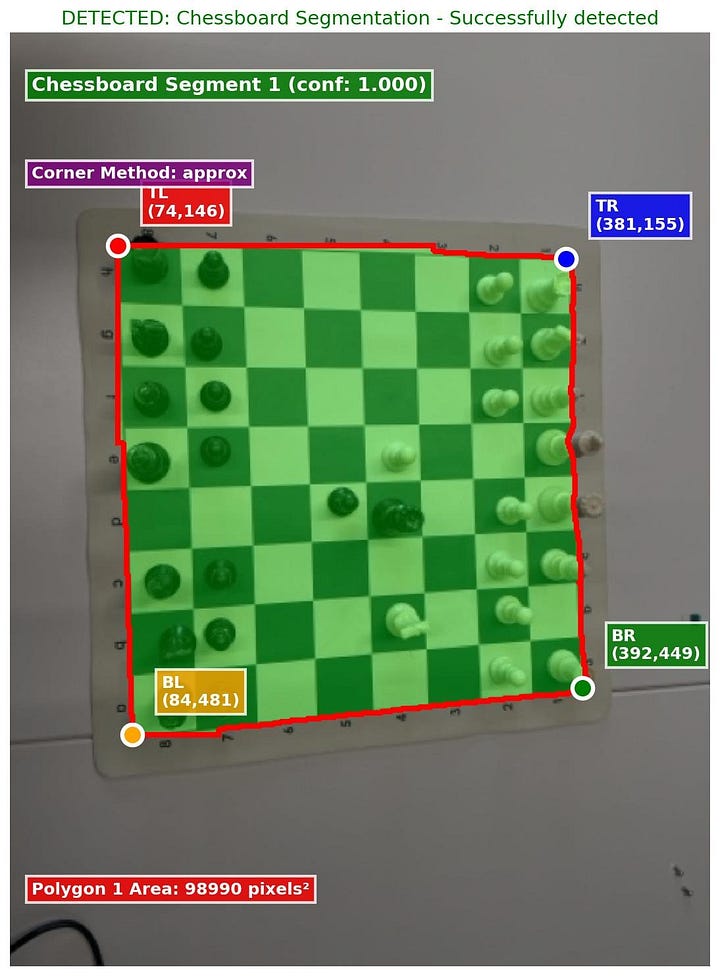

I figured chessboard detection had to be a solved problem in classic computer vision. Turns out, no. I tried this GitHub repo with OpenCV. It worked at some angles, but fell apart if I nudged the board. After three days of trying, I reached for the ML sledgehammer 🔨.

I trained a YOLO-based segmentation model, with which I could then crop and warp the board, overlay an 8×8 grid, and map each square. Then I added an object detection model for the pieces and voilà: the current position. I could feed that into a chess engine (Stockfish) to predict the next move. The only thing left was for the robot to execute the move.

Part 2: Moving the chess pieces with the robot.

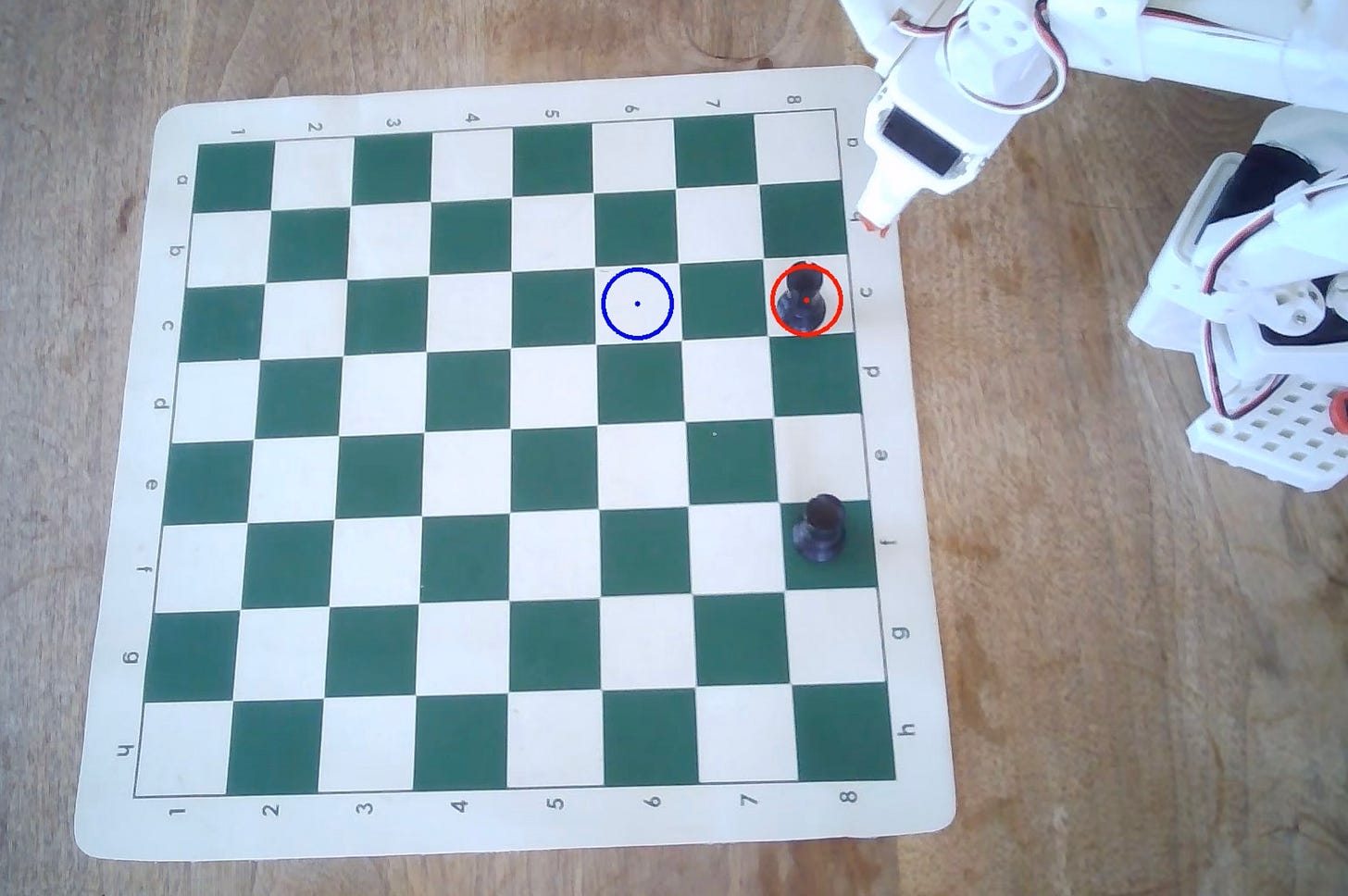

I wanted to test different models. Imitation learning policies (like ACT) are trained on repeating the same task across varying positions or objects with only visual input. Others (like VLAs) also take text instructions, but “move the rook from G7 to G2” didn’t feel reliable. I wanted visual cues only, for one, because it made more intuitive sense to me, and for another, to ensure compatibility of the data collected with all models.

So I devised the following: overlay two circles on the context camera feed:

Red circle = piece to move

Blue circle = target square

First test: Does the model understand?

I trained ACT on 100 examples: a board with just two rooks, moving one forward.

Result: yes! The arm always reached for the right piece. The success rate for the full movement was 24% (6/30). Often it missed the piece by a few cm, gripping air and then moving to the target square with an empty hand. Probably the dataset size.

Curious what the data for a task like this looks like? You can explore it online here.

Scaling up: 500 episodes

Next, I collected 500 “random” moves from grandmaster games. I varied black/white orientation, board positions, camera angles, and lighting (day vs evening). Data diversity: ✅.

But training ACT on this gave <5% success rate. Ouch. I wondered: maybe 500 examples weren’t enough? The ACT paper had 50, but always the same task. I was trying to teach all possible chess moves.

I found an Australian’s chess robot project. The video description said 1,500 episodes were needed. Aha! Not enough data was the problem. And so I collected another 1,500 episodes of data in the next 2.5 days.

But more data didn’t fix the problem

Even with those 1,500 samples, the robot still staggered around, moving in roughly the right direction but missing like a drunk man trying to fit a key into a lock.

Something was fundamentally off. Too few samples? Too much variation? Wrong model? I started to review everything:

My cheap €50 camera caused daylight samples to be highly overexposed. Poor visual data quality.

ACT is transformer-based and not great at multi-modal input. Maybe that?

Diffusion policies are apparently better at multi-modal input. Tried that. → nope.

Tried HuggingFace’s SmolVLA (pretrained on my exact robot model) → even worse.

Biggest problem: I had varied to many factors (camera positions, board positions, lighting)

And my laptop was hitting its limits. Inference was slower than execution, meaning the robot froze briefly yet visibly between frames. Then I found out training was slower than it should be because I hadn’t downsampled the video, so each forward pass was bloated with an image resize. Rookie mistake.

What to try next?

I saw three paths forward:

Fix everything in place (board, arm, camera). Recollect 1,500+ episodes. Retrain.

Human-in-the-loop interventions (requires the newer SO-101 arm).

Fine-tune a big model like π0 → but that’d need a Jetson or GPU for inference, way beyond my MacBook M3.

But the hackerhouse was ending, meaning even more changing lighting and environments. With some reluctance, I paused Chesso and shifted back to reading, talking, and absorbing more robotics.

Reflection

In a way, it felt like I had made every possible mistake you can make when training a robotic policy. A few years ago, I would have felt very stupid. But now I’m thinking that if the rate at which I was finding new mistakes meant anything, then probably only that I was moving pretty fast.

At ETH, I spent years with the sense of being behind, of not being smart enough to keep up. Back then, I thought that feeling was unique to me. Later, I realised it was universal - everyone felt the same way. In frontier fields, feeling stupid isn’t a personal failing; it’s simply what progress looks like. The real barrier isn’t knowledge - it’s persistence. Most people quit when that feeling doesn’t go away.

I saw how brittle these systems are to small changes (lighting, camera position, board alignment). This fragility isn’t just my obstacle; it’s the bottleneck for everyone else, too. Making these mistakes now - while the potential of robotics isn’t evident to others yet - feels like I’m gaining an early-mover advantage.

What surprised me the most was that even after a few days of things constantly failing, I was having a lot of fun and was constantly optimistic. Usually, repeated failure drives people out - as I saw with the two second-time founders who made a U-turn. Could this intrinsic interest and fun be a unique advantage I have here?

So while I left SF without a working chess robot, I also left with a very strong conviction that this is what I want to keep working on and that the difficulty itself is the opportunity. This isn’t just another AI agent that anyone can build in a weekend. The fact that so few people are willing to stay in the game is precisely what makes it so worth playing.

Learnings

Robotics is hard. Way harder than online videos make it look.

Saving money on cheap hardware will cost me time.

Lighting and camera positions matter a lot. Start with a fixed environment first, and add variation later.

Be aware of your data’s resolution and the model’s input. Downsample the video before training, or suffer.

SOTA models cannot run on my MacBook. Remote compute also doesn’t work - the robot has to run with local compute because of latency - I’ll need a proper graphics card.

Evaluating robot models is painfully slow. Even 30 episodes feels like forever, but less isn’t enough to detect small gains. And you need to rerun baselines under the same conditions.

Focus on the part with the highest uncertainty first (here, the chessboard detection).

Reliability is everything. Even a “99%” model means 60% success over a 50-move game because each move compounds the failure probability (0.99^50). That’s not deployable.

You can find the code for the project here. You might also be interested in checking out the daily blog I kept on what I was working on any given day (with some exceptions).2

Next up: Validating demand and identifying use cases.

Further above, I mentioned tech being the biggest risk. The second biggest risk is demand. With perfect tech, demand is guaranteed. But it will take years to get there. There will be a long time where the tech is somewhat working. So, is now the right time to start a company?

It’s a matter of time, so the only way to lose is by running out of money too early. Money can either come from VC funding or revenue. I don’t have the pedigree to raise a 400m pre-seed like Physical Intelligence’s team and only focus on research, but among all people capable of building robotic ML, I have a comparative advantage in selling. The way for me to start isn’t by exclusively focusing on research. It’s by using what already exists, adding an RL layer to it for high-reliability, and automating the first real use cases.

These are my goals for the rest of the year:

🥇Pre-sell a use case to an end user

Number 1 priority by a large margin. Ideally, I’d like to identify a simple application which is not feasible with traditional robotics and automate it. The way I imagine this to work is (1) talk to potential users, (2) identify an amenable application, (3) build a first demo by myself to show to the customer, (4) the customer likes the demo, I promise to build a deployable version within 6 months for a 25k prepayment.

🥈Learn more about and build robotic ML experience

To ensure that I can actually deliver on that promise, I need the capability to build such a demo. That’s why I’m reading papers, comparing what others are doing and - as soon as I have a fixed desk again - continue building.

🥉Share what I know and build a social media presence

Success doesn’t mean building a company worth a lot of money. It means creating a Europe where routine work is automated, and where we can defend our values with a competitive economy. No single company can do that alone. I want more people from ETH and other universities to start robotics companies, and that’s why I’ll be sharing my experiences and what I’m learning on X and Substack to encourage others.

I’m surprised more people don’t do this. The extra effort of sharing what you’ve done is small. You’ve already done the work. All that’s left is putting it into words and maybe finding a photo in your camera roll. I often think of the sentence:

If you’re chopping wood, collect the sawdust.

If you’re helping others learn about a given topic, then you’ll be perceived as someone who knows and understands that topic. In the short term, it may feel like unnecessary extra work, but over time, the value compounds, and you grow an audience. I think it’s a useful way to frame how helping others also helps you attract customers and hire smart people.

Co-founder? Yes! But not the #1 priority.

I think I’m most likely to find the best fit with a European co-founder, or at least someone with a similar personal background. That’s why I put the co-founder search in San Francisco on hold. Now that I’m back in Europe, I’m starting a few conversations and will make it a focus after my 1-month user outreach.

I’m looking for a co-founder with an even stronger research background than mine, ideally in reinforcement learning or robotic ML. We’ll be building the first versions together, then dividing focus: my co-founder driving the research to advance the core product, and I working with customers and leading on-site deployments.

If you know anyone who might be a fit, please reach out.

The hacker house was the best decision

The two biggest assets you can have in the early stage of building are your optimism and many hours of focused work per day, and this setup was ideal for both. We had a perfect mix of nationalities (3 Frenchies, 2 Germans, 1 American) and backgrounds (3 quant finance, 1 roboticist, 1 designer, and me).

3 Things I loved about it:

Work and social life blended. I could start working in the morning and still get real breaks and conversations in the afternoon, which was something I missed a lot working alone the last months.

Organising events was effortless. We were already six, so hosting was easy, and the house naturally attracted some incredible visitors because they could meet six people at the same time.

Being in a group of people I respect for their abilities and in the same founding stage normalised occasional doubts that can be a drain on your energy - even when you know they’re stupid - when you’re working alone.

2 things I’d do differently next time:

Weekly check-ins: Max pushed for this early on, but we let it slide. Regular check-ins would have added social accountability and made it easier to follow what everyone was working on week by week.

Demo day at the end: Even without a goal of raising money or shipping a finished product, a small demo day for friends would have been valuable. It would have pushed us to put in that extra evening hour and kept the standard higher. If you set out to make 25 customer calls but start doubting the point after 7, knowing you’ll have to present your work makes you much more likely to push through.

My Top Reads

Tradle: A game that works like Wordle, but you have to guess a country based on its trade balance. (Thank you to Pauline for showing me this)

Chelsea Finn’s talk on Physical Intelligence’s work at YC’s AI start-up school.

Andrej Karpathy also held a great talk at YC’s start-up school on his intuitions, abstractions and mental models he uses to think about AI.

Peter Holderrieth taught and shared a great course on diffusion models that I worked through. I found the first two lectures interesting, but from then on found the lecture notes more useful than the video lectures.

I enjoyed the book AI for Robotics by Alishba and Keerthana to get an overview of all subdomains of robotics (beyond manipulation): Lidar & point clouds, autonomous driving, navigation problems, simulation, and more.

The blog interestingly served as a useful directory of resources. I often found myself opening the blog to copy-paste and send a link plus my comment to a friend after a conversation. Summarising my thoughts did take 10-30 minutes per day, though. So while I’m not continuing it, I’d strongly consider doing it again for another single-goal sprint.

Cool journey and post. But do you think that looking for a European cofounder is just to find a likeminded person or also to start building and accelerating stuff in here? From your previous post it seemed like the gap between Europe and Asia/America is too significant to spend time, energy, and potential trying to bridge it... Has this view changed after your visit in SF? Or I read that wrong and the state of innovation in Europe had never discouraged you from building in here?